This is the first post in NanoMatriX’s AI Governance Series.

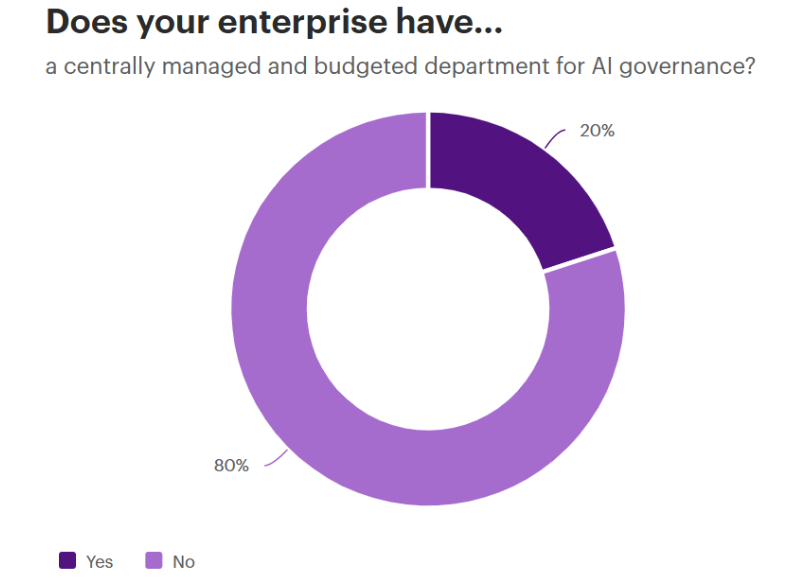

As artificial intelligence becomes central to business operations, companies face the challenge of using it responsibly. A McKinsey report shows that 60% of businesses now integrate AI into their processes, but only 20% have governance frameworks to manage the associated risks. While AI can drive innovation and efficiency, it also raises concerns about fairness and transparency.

Leading companies understand that managing these challenges takes more than technology; it calls for strong governance practices. Sustainable AI governance ensures that AI systems are not only efficient but also ethical and aligned with long-term goals.

Let’s explore how forward-thinking companies implement strategies to build trust and promote responsible AI use.

Understanding Sustainable AI Governance

Sustainable AI governance is a framework that helps companies keep their AI systems ethical and accountable. It looks beyond immediate business goals to focus on long-term impact. Good governance helps ensure that AI decisions are fair, protects user privacy, and minimizes bias in data and algorithms.

The key principles of sustainable AI governance include:

- Fairness: Ensuring AI treats all individuals and groups equitably, without discrimination.

- Transparency: Making AI processes understandable for stakeholders.

- Accountability: Holding teams responsible for the decisions their AI systems make.

- Inclusivity: Considering diverse perspectives during AI development.

By focusing on these principles, companies can build trust with users and regulators. This approach helps keep AI aligned with societal and ethical values while supporting innovation.

The Need for Sustainable AI Governance

Without proper governance, AI systems can lead to serious issues. One major concern is bias in AI algorithms. If the data used to train these systems is biased, the outcomes can be unfair or discriminatory. For example, a hiring algorithm that favors certain demographics over others can reinforce existing inequalities.

Another risk is the invasion of privacy. AI systems often rely on large amounts of personal data. If companies do not have strong data protection measures, they may expose sensitive information to unauthorized access or misuse.

Real-World Case Study

Poor AI governance has led to notable failures. One example is the Dutch childcare benefit scandal, in which thousands of families were wrongly accused of fraud due to a flawed algorithm. This incident highlights the consequences of improper oversight and accountability in AI systems.

Best Practices in Implementing AI Governance

To implement sustainable AI governance, companies should follow several best practices. These practices help ensure that AI technologies are used responsibly and ethically. Here are some key strategies that leading organizations adopt:

1. Creating a Strong Governance Framework

A strong governance framework is essential for overseeing AI initiatives. It should define the roles and responsibilities of everyone involved in AI projects and may include oversight committees or ethics boards that monitor AI applications. These groups help ensure that AI systems align with the company’s values and ethical standards.

2. Building Diverse and Inclusive Teams

Diversity in teams helps identify and address potential biases in AI systems. Companies can create fairer and more balanced AI models by including people with different backgrounds and perspectives.

3. Establishing AI Ethics Committees

Many companies have established AI ethics committees to oversee decision-making. These committees review AI projects to ensure they align with ethical principles and organizational values.

4. Adopting Advanced Monitoring Tools

Leading companies use advanced AI governance tools to monitor AI systems in real time. These tools help ensure that AI systems comply with evolving regulations and ethical standards. Automated monitoring systems can track how AI models behave in different scenarios and help identify biases or undesirable behaviors early.
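To make "identify biases early" more concrete, here is a minimal sketch of one check an automated monitor might run. It assumes the AI system logs each decision as a pair of (group, selected) and uses the selection-rate ratio behind the "four-fifths rule" from US employment guidance as the bias metric. The function names and the 0.8 threshold are illustrative assumptions, not the API of any specific governance tool.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Compute the share of positive outcomes per group.

    `decisions` is an iterable of (group, selected) pairs, e.g. logged
    outputs of a hypothetical hiring model.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_alerts(decisions, threshold=0.8):
    """Flag groups whose selection rate falls below `threshold` times
    the highest group's rate (the four-fifths rule when threshold=0.8)."""
    rates = selection_rates(decisions)
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        # No group was selected at all, so no ratio can be computed.
        return {}
    return {
        group: rate / best
        for group, rate in rates.items()
        if rate / best < threshold
    }


if __name__ == "__main__":
    # Hypothetical log: group A selected 50 of 100 times, group B 30 of 100.
    log = [("A", i < 50) for i in range(100)] + [("B", i < 30) for i in range(100)]
    print(disparate_impact_alerts(log))  # prints {'B': 0.6}
```

In practice, a monitoring system would likely run a check like this on a rolling window of recent decisions and route any flagged groups to human reviewers. The selection-rate ratio is only one of several fairness metrics, and which one fits depends on the use case.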

5. Collaborating with Industry and Regulators

Leading companies work closely with regulators and industry groups to shape the future of AI governance. These partnerships help them stay ahead of changing regulations and adopt best practices.

By adopting these strategies, organizations can manage the complexities of AI governance effectively.

Multi-Layered Governance Approaches

A multi-layered governance approach can help companies manage the complexities of AI technologies. It combines different levels of oversight and regulation so that AI systems are monitored effectively.

Here are some key aspects of multi-layered governance:

- International Level: At the international level, companies can look to global frameworks and guidelines. Bodies like the European Union are developing regulations to guide the ethical use of AI. These regulations aim to create a common standard that companies can follow, promoting fairness and accountability across borders.

- National Level: Each country may have its own laws and regulations regarding AI. National governments often set guidelines that organizations must adhere to. These laws can cover data protection, privacy rights, and ethical considerations in AI development. Businesses need to stay informed about these regulations to ensure compliance.

- Local Level: Local governance is also important. Organizations should consider the specific needs and concerns of their communities. Engaging with local stakeholders helps identify unique challenges related to AI use. This localized approach ensures that AI systems address community values and needs.

Role of Ethics Boards and Compliance Officers

Many businesses establish ethics boards and appoint compliance officers to implement a multi-layered governance approach. Ethics boards consist of diverse members who evaluate AI projects from various perspectives. They help ensure that ethical standards are met throughout the development process.

Compliance officers can also monitor adherence to laws and internal policies. They conduct regular audits and assessments to identify any potential risks or violations. This oversight helps businesses maintain accountability in their AI practices.

Case Studies from Leading Organizations

Many companies are already leading the way in sustainable AI governance. Here are a few examples:

Google

Google has published a set of AI Principles and reviews new AI projects against them to guide the company’s decision-making. This review process is intended to keep Google’s AI technologies aligned with ethical guidelines and to identify and mitigate potential biases.

IBM

IBM’s AI governance framework emphasizes transparency and fairness. The company publishes transparency reports on how its AI models operate and offers tools that let customers audit AI algorithms. IBM has also been vocal about its commitment to building AI systems that are accountable and explainable.

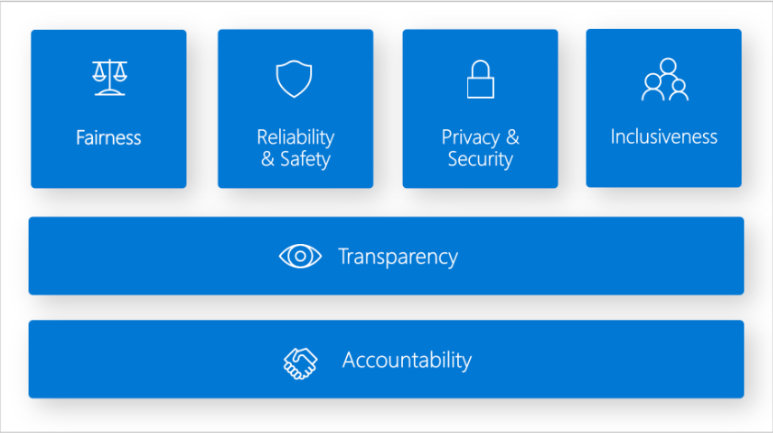

Microsoft

Microsoft’s Responsible AI principles include fairness, privacy, and inclusiveness. The company has invested heavily in AI governance, developing tools that empower businesses to monitor their AI systems and ensure compliance with global standards.

These companies have demonstrated that sustainable AI governance is achievable and essential for maintaining ethical and operational standards in the face of AI’s rapid growth.

Challenges in Implementing Sustainable AI Governance

Implementing sustainable AI governance is not without its difficulties. These challenges include:

- Inconsistent Regulations: Many organizations face challenges due to the fast pace of AI development and the lack of clear regulations. Without universal standards, it can be hard to ensure consistency in how AI systems are governed.

- AI Bias: Addressing bias in AI systems is another issue. Algorithms often reflect biases in their training data, which can lead to unfair outcomes. Identifying and fixing these biases requires ongoing effort and diverse input.

- Balancing Innovation and Safety: Balancing innovation with ethical practices is another obstacle. Companies want to use AI to stay competitive, but rushing development can produce systems that lack proper safeguards, which increases the risk of unintended consequences.

- Data Privacy: Data privacy is also a pressing issue. AI systems often need large amounts of data, raising concerns about how this information is collected, stored, and used. Ensuring compliance with data privacy laws adds another layer of complexity.

- Accountability: Who is responsible when an AI system fails or causes harm? Defining accountability for both developers and end users is essential to effective governance.

Organizations that prioritize sustainable AI governance can overcome these hurdles.

Transform Your AI Governance with NanoMatriX

As AI technologies evolve, effective governance is more important than ever. Companies should make sure that their AI systems are not only efficient but also ethical and responsible.

This is where NanoMatriX comes in. NanoMatriX offers a specialized AI Governance Course to empower your team with the essential knowledge and skills to implement robust governance frameworks. This course covers important topics such as ethical AI practices, risk management, compliance with regulations, and strategies for continuous monitoring.

By enrolling in the NanoMatriX AI Governance Course, you will:

- Gain Expert Insights: Learn from industry leaders who bring real-world experience and best practices to the table.

- Enhance Your Team’s Skills: Equip your team with the tools necessary to deal with the complexities of AI governance.

- Promote Accountability: Develop a culture of responsibility within your company that prioritizes ethical AI use.

Don’t leave your AI governance to chance. Take proactive steps to ensure your company is prepared for the challenges ahead.

Ready to elevate your AI governance practices? NanoMatriX’s AI Governance Course is designed for organizations looking to build or strengthen their governance frameworks. Enroll now to learn more.

Read the second blog post for NanoMatriX’s AI Governance Series here.

About NanoMatriX Technologies Limited

NanoMatriX is a specialist in providing document and brand protection solutions. To solve our customers’ problems, we provide the following product and service categories:

- Brand-/document protection platforms

- Custom software development

- Cybersecurity services

- Anti-counterfeiting products

- Consulting services

The competitive advantages of NanoMatriX are:

- Two decades of experience helping brand owners and government agencies fight product and document crime worldwide.

- A unique combination of rare skills in linking physical overt, covert, and forensic security features with secure digital features.

- Proven rigorous application of top cyber security and data privacy protection standards.

- Multi-lingual, multi-cultural, and collaborative corporate culture.

NanoMatriX Technologies Limited is committed to the highest standards of cyber security, data privacy protection, and quality management. Our systems are certified and compliant with leading international standards, including:

- ISO 27001: Ensuring robust Information Security Management Systems (ISMS).

- ISO 27701: Upholding Privacy Information Management Systems (PIMS) for effective data privacy.

- ISO 27017: Implementing ISMS for cloud-hosted systems, ensuring cybersecurity in cloud environments.

- ISO 27018: Adhering to PIMS for cloud-hosted systems, emphasizing privacy in cloud-hosted services.

- ISO 9001: Demonstrating our commitment to Quality Management Systems and delivering high-quality solutions.
